Crawl budget is one of those SEO terms you’ve probably heard marketing people talk about.

However, do you know what it means and what it’s all about?

Not to worry, we’re not here to test you. On the contrary, we’re here to help you understand what crawl budget is, why it’s important, and how you can check and optimize it.

After all, you’ve got to learn to crawl before you can walk, right?

What Is Crawl Budget?

Crawl budget is the number of pages Googlebot can and wants to crawl on a website within a specific timeframe.

You see, every time you publish a page on your website, you need to wait for Google to index and rank it. And before it can do either, its bots have to crawl the page.

This process can happen faster or slower depending on circumstances like how large your website is, how popular its topics are, how much traffic your pages get, and so on.

But how does crawling actually work?

Let’s find out.

How Do Crawlers Work?

More than 10 years ago, Google realized that it had finite resources and could only find a limited percentage of the never-ending stream of content constantly being published online.

Then, in 2017, Google published its official “What crawl budget means for Googlebot” article, in which it explains how it defines crawl budget and covers other pertinent details.

Here are the essential facts:

- Websites with fewer than a few thousand URLs are usually crawled efficiently

- Pages that respond quickly can increase the crawl rate limit

- You can reduce your website crawl rate via Google Search Console

- Popular URLs tend to be crawled more often

- An abundance of low-value URLs (duplicate content, soft error pages, etc.) can have a negative impact on indexing and crawling

- Crawling is not a direct ranking factor

Basically, in order to ensure the maximum crawlability of your website, you need to have fast loading times, avoid duplicate and low-quality content, and make your pages popular.

However, it’s important to note that the majority of website owners do not need to be too concerned about crawl budget. It is large websites with thousands of pages, like eCommerce stores, digital publications, and popular blogs, that should pay attention.

Why Is Crawl Budget Important?

Crawl budget is very important to your SEO efforts, especially when you have thousands of pages and publish lots of new articles every day. The bots will want to crawl your new content, but they will be revisiting the rest of your website at the same time.

If they run into multiple setbacks and/or issues that confuse them, they will end up wasting crawl budget on old pages instead of spending it on the new content you want indexed. This can create delays and temporarily (or even permanently) keep your URLs out of the SERPs.

For example, say you’re publishing news or other information that needs to gain visibility as fast as possible.

You wouldn’t want to wait for a week for your post to get indexed, would you?

After all, you can’t rank in the SERPs if your page isn’t indexed. Let’s say you’re writing about a world event that happened today, but your page gets crawled in five days’ time. By then, it’s too late to achieve your initial goal, as the event isn’t as relevant and fresh anymore. Not to mention, loads of other websites will have written about it and gotten indexed in time.

It’s not uncommon for a new page to take up to a week to get crawled and indexed, but to be honest, it should take less time than that.

Meanwhile, you’ll keep publishing new pages that also need to be crawled, indexed, and made available to your target audience in a timely manner. And that’s a recipe for an indexing bottleneck.

All in all, if you often reach the limits of your crawl budget, you risk some of your pages slipping between the cracks and failing to make it to the SERPs. As a result, these will not benefit from organic traffic, and will have much lower visibility than the rest of your content.

And if you’re wondering how to check your crawl budget, it’s easy. Just go to Google Search Console and open Settings → Crawl stats.
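If you want a quick sense of what those numbers mean, some back-of-the-envelope math helps. The Python sketch below divides the number of pages you want crawled by the average number of crawl requests per day shown in the Crawl Stats report. Treat the result as a rough estimate only: the report’s request count also includes files like images, CSS, and JavaScript, plus repeat visits to the same URLs, so the real time needed to crawl every page is likely longer. The function name and the figures are hypothetical.

```python
def crawl_coverage_days(total_pages: int, avg_crawled_per_day: float) -> float:
    """Rough number of days Googlebot would need to request every page once.

    Simplifying assumption: one request per page at a steady daily rate.
    """
    if avg_crawled_per_day <= 0:
        raise ValueError("avg_crawled_per_day must be positive")
    return total_pages / avg_crawled_per_day

# Hypothetical figures: 50,000 pages, ~2,500 crawl requests per day
days = crawl_coverage_days(50_000, 2_500)
print(f"~{days:.0f} days for a full pass")  # ~20 days for a full pass
```

If the number of days keeps growing as you publish, that’s a hint your content is outpacing your crawl budget.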

Crawl Budget Optimization

It’s important to remember that crawl budget is dependent on three major factors: website size, website health, and website popularity.

This means you generally don’t need to worry about wasting crawl budget if:

- Your website is relatively small, meaning fewer than a few thousand pages.

- Your website is free of status code errors and has proper canonicalization.

- Your website is popular, meaning you have built a social media following and other channels to share your content and generate traffic.

Covering these criteria means that Googlebot should be able to efficiently crawl your website.

If your website lacks any of these three factors, you risk running into crawling issues of varying severity and losing organic search visibility for some of your pages.

Here are some crawl budget best practices to follow:

Improve Your Website Speed

As mentioned above, website speed and page loading time can affect the crawl rate limit. A healthy, fast website means that Googlebot can crawl more of your pages in less time.

On the other hand, slower-loading pages reduce the crawl rate and, on a large website, this may result in overall indexing issues.

In a nutshell, make sure to optimize your site speed. This will not only make things easier for the bots, but also improve the user experience.
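To spot slow pages yourself, you can time how long your server takes to return them. Here’s a minimal Python sketch using only the standard library. The function name is our own, and the timing is measured from your machine, so it won’t match what Googlebot experiences exactly (the Crawl stats report in Search Console shows Google’s own average response time).

```python
import time
import urllib.request

def measure_response_time(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Fetch a URL and return (HTTP status, seconds taken to download the full response).

    Notes: urlopen follows redirects automatically, so the status is that of the
    final URL, and it raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()  # read the body so the timing covers the whole page
        status = response.status
    return status, time.perf_counter() - start

# Example usage (hypothetical URL; replace with pages from your own site):
# status, seconds = measure_response_time("https://www.example.com/blog/")
# print(status, f"{seconds:.2f}s")
```

Running it over a sample of URLs from each section of your site is a cheap way to find the templates that drag your average down.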

Apply Internal Linking

The best-case scenario is to have backlinks pointing to every page on your site. Not only do backlinks bring visitors to your site and show search engines that your content matters, they also attract Googlebot.

Simply put, backlinks are the golden boy of SEO.

But here’s the deal.

Aside from backlinks, Google loves internal linking as well. Internal links help Googlebot discover all of your pages and better understand how they relate to each other. And while backlinks can be difficult to come by, you have complete control over internal ones and can add as many as you need (just don’t overdo it).

Applying this optimization technique helps more of your pages get crawled.
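If you’d like to audit your internal links, a good first step is listing the links each page contains. The sketch below uses Python’s built-in `html.parser` to collect the internal links on a single page. The helper names are hypothetical, and the sketch only sees links present in the raw HTML, not ones added later by JavaScript.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collects the href values of all <a> tags in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)

def internal_links(html: str, page_url: str) -> set[str]:
    """Return absolute URLs on the same host as page_url, with #fragments removed."""
    parser = LinkCollector()
    parser.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in parser.hrefs:
        absolute = urljoin(page_url, href).split("#", 1)[0]  # resolve relative links
        if urlparse(absolute).netloc == host:  # keep only links to our own site
            links.add(absolute)
    return links

sample = '<a href="/guides/crawl-budget">Guide</a> <a href="https://other.example/">Ext</a>'
print(internal_links(sample, "https://www.example.com/blog/"))
# {'https://www.example.com/guides/crawl-budget'}
```

Run it across your pages and you’ll quickly see which ones link out to very few others, and which ones hardly anything links to.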

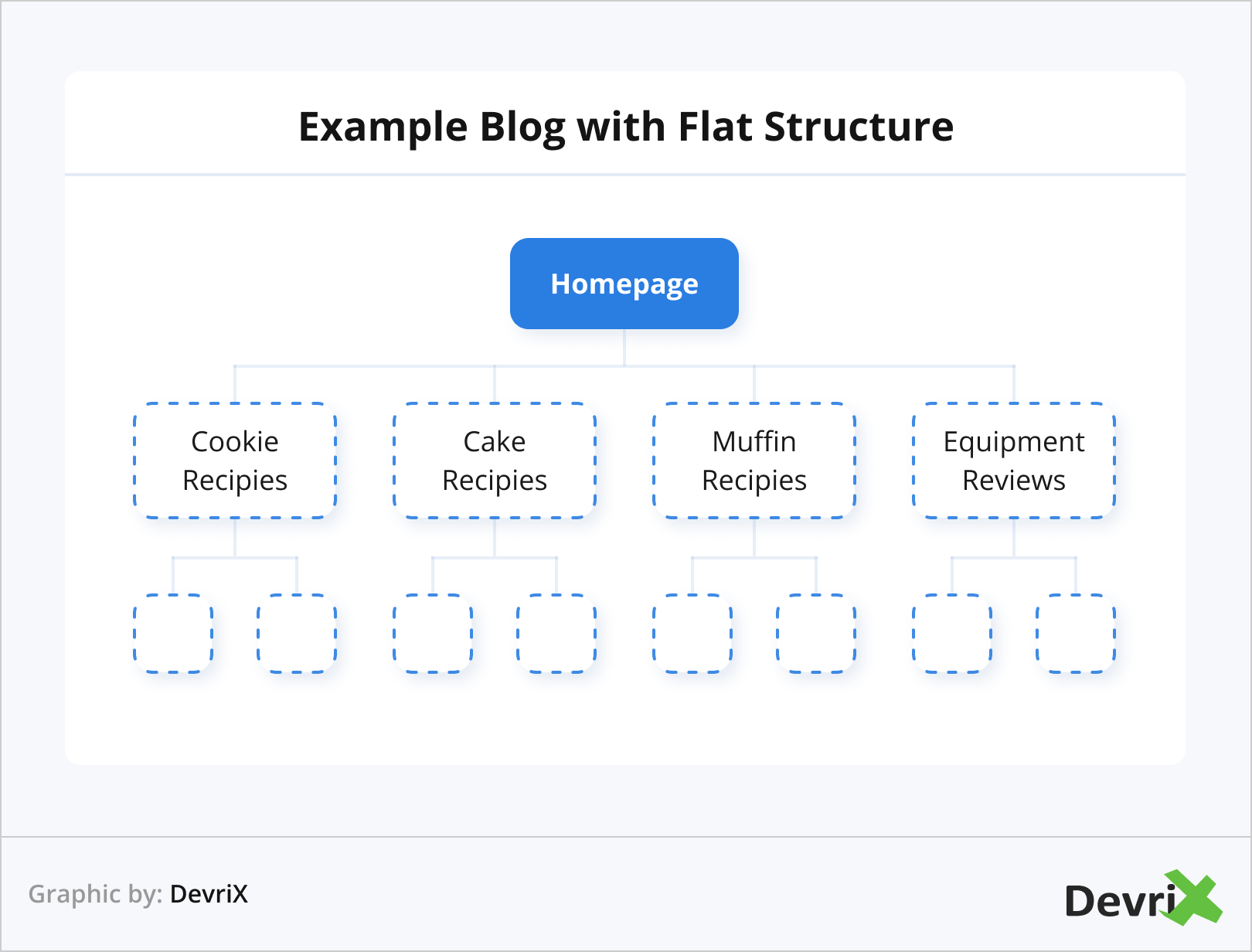

Use Flat Web Architecture

Popularity is very important in the eyes of Google, which is why it’s beneficial to use a flat website architecture. A flat architecture distributes link authority more evenly across all your pages.

It helps bots follow how the pages on your website are connected, and also lets you group related pages together to build topical authority.

In practice, this means that both users and search engines can reach any given page on your website within four clicks of the homepage.
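You can check the four-click rule by treating your internal links as a graph and running a breadth-first search from the homepage. That gives each page its minimum click depth. The link map here is a hypothetical example; in practice, you’d build it from a crawl of your own site. Pages that never show up in the result can’t be reached from the homepage at all.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is always via the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal-link graph: page -> pages it links to
site = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/page-4"],
    "/blog/page-4": ["/blog/old-post"],
    "/shop": ["/shop/shoes"],
}
depths = click_depths(site, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > 4)
print(too_deep)  # ['/blog/old-post']
```

Here, the old post sits five clicks deep behind paginated blog archives, which is exactly the kind of page a flatter structure (or a few extra internal links) would bring closer to the surface.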

Avoid Orphan Pages And Duplicate Content

An orphan page is one that no other page on your website links to. With no internal links pointing to it, it’s isolated from the rest of your content and, logically, harder for Googlebot to discover.

You can easily avoid orphan pages by making sure every article and page on your site is linked from at least one other page.
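To find orphan pages, compare the full list of URLs you want indexed (for example, from your XML sitemap or CMS) with the set of URLs your internal links actually point to. The full list has to come from somewhere other than your links, since a crawler that only follows links will never reach an orphan. The function name and data below are hypothetical.

```python
def find_orphans(all_pages: set[str], links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Return pages that no other page links to (the homepage is excluded)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't count
    }
    return all_pages - linked_to - {home}

# Hypothetical inputs: URLs from a sitemap or CMS export, plus a crawl's link map
pages = {"/", "/about", "/blog/post-a", "/blog/post-b"}
links = {"/": ["/about", "/blog/post-a"], "/blog/post-a": ["/"]}
print(find_orphans(pages, links))  # {'/blog/post-b'}
```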

Manage Duplicate Content

Duplicate content causes numerous problems. Having multiple URLs that differ only in their parameters is confusing for crawl bots. Googlebot wastes time figuring out which page is the main one and which are duplicates, and that slows down the crawling process.

For sites like eCommerce stores, where products that differ only slightly (in size or color, for example) often end up with multiple similar URLs, be sure to use canonical tags. They guide Googlebot to the pages you want to prioritize.
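Before adding canonical tags, it helps to see which URLs are really variants of the same page. The sketch below normalizes URLs by dropping parameters that only select a variant or track a visit, then groups the URLs that end up identical. The parameter names in `VARIANT_PARAMS` are assumptions; which ones are safe to drop depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that only pick a variant or track a visit
VARIANT_PARAMS = {"color", "size", "utm_source", "utm_medium", "sessionid"}

def canonical_url(url: str) -> str:
    """Drop variant/tracking parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in VARIANT_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def group_duplicates(urls):
    """Map each canonical URL to the variants that collapse onto it (2+ only)."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}

urls = [
    "https://shop.example.com/shirt?color=red&size=m",
    "https://shop.example.com/shirt?size=l",
    "https://shop.example.com/shirt",
    "https://shop.example.com/hat",
]
for canon, variants in group_duplicates(urls).items():
    # Each variant page would carry this tag in its <head>
    print(f'<link rel="canonical" href="{canon}">', len(variants), "variants")
```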

Disallow Crawling For Some Pages

Every website has pages like login screens, contact forms, shopping carts, and so on. These don’t need to be crawled, and many of them offer nothing useful to a crawler anyway. Google will still try, though, so it’s a good idea to tell the bots to skip them and save resources.

To perform this action, use your robots.txt file to disallow the crawling of the pages you want.
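For example, a robots.txt file along these lines would keep crawlers away from login, cart, and checkout pages, and Python’s built-in `urllib.robotparser` lets you confirm the rules block what you expect. The paths are hypothetical. Python’s parser uses simple prefix matching and doesn’t support the `*` and `$` path wildcards Google recognizes, so treat it as a sanity check rather than an exact emulation of Googlebot. Also keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still show up in search results if other pages link to it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths: adjust to wherever your login, cart, and checkout pages live
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /cart
Disallow: /checkout
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/login", "/cart/items", "/blog/crawl-budget"]:
    url = "https://www.example.com" + path
    print(path, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")
```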

Update Old And Write New Content

Did we mention that Google likes fresh content? It does.

Google may even start crawling your page less often if it found nothing new there the last few times it visited.

Imagine Googlebot visits your site once every two days and finds new content to index every time. Then, all of a sudden, for some reason, you stop updating your website. At first, Googlebot keeps visiting with the same frequency. After a while, though, the bot notices there is no new content to index and, eventually, makes its visits less frequent.

In the opposite scenario, where you start to provide fresh content more often, Googlebot could start visiting your website more often.

Generally, when deciding which pages need updating, look at relevance rather than age alone. An article could be two years old and still contain applicable information (though you should still update it), or it could be a month old and already have lost its freshness.
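If you have a lot of content, a simple age check can help you build a review list. The sketch below flags pages that haven’t been updated within a given number of days (365 here, an arbitrary assumption) and sorts them oldest first. Age alone doesn’t tell you whether a page is still relevant, so treat the output as a list to review, not a list to rewrite. The function name and dates are hypothetical.

```python
from datetime import date, timedelta

def pages_due_for_review(last_updated: dict[str, date], today: date, max_age_days: int = 365) -> list[str]:
    """List URLs not updated within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for updated, url in sorted(stale)]

# Hypothetical last-modified dates, e.g. exported from your CMS
inventory = {
    "/blog/crawl-budget": date(2021, 3, 1),
    "/blog/site-speed": date(2023, 11, 20),
    "/blog/internal-links": date(2020, 6, 15),
}
print(pages_due_for_review(inventory, today=date(2024, 1, 1)))
# ['/blog/internal-links', '/blog/crawl-budget']
```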

Reduce Error Pages

This one is straightforward to understand.

Googlebot wastes time when it crawls URLs that return 5xx status codes (server errors), 4xx status codes (client errors, such as 404 Not Found), or 3xx status codes (redirects). On top of that, frequent server errors can cause Google to lower your crawl rate.

Basically, every request that doesn’t return a 200 status spends crawl resources without reaching live content. In practice, there is no need to direct Google’s attention to pages you’ve deleted or redirected. Instead, prioritize your live URLs and make sure your links point to them.
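One practical way to stop pointing Googlebot at deleted or redirected pages is to find internal links whose targets don’t return a 200. The sketch assumes you already have two things from a site crawl (both hypothetical here): a map of each page’s internal links, and the HTTP status of each linked URL. Links to 3xx URLs can usually be updated to the redirect’s final destination, and links to 4xx URLs removed or repointed.

```python
def links_to_fix(links: dict[str, list[str]], status: dict[str, int]) -> list[tuple[str, str, int]]:
    """Return (source page, linked URL, status) for internal links that don't resolve to 200."""
    problems = []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target)  # None means the crawl has no status for this URL
            if code is not None and code != 200:
                problems.append((source, target, code))
    return problems

# Hypothetical crawl export: page -> internal links, and URL -> HTTP status code
links = {
    "/": ["/blog", "/old-promo"],
    "/blog": ["/blog/post-a", "/blog/deleted-post"],
}
status = {"/blog": 200, "/old-promo": 301, "/blog/post-a": 200, "/blog/deleted-post": 404}

for source, target, code in links_to_fix(links, status):
    print(f"{source} links to {target} ({code})")
```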

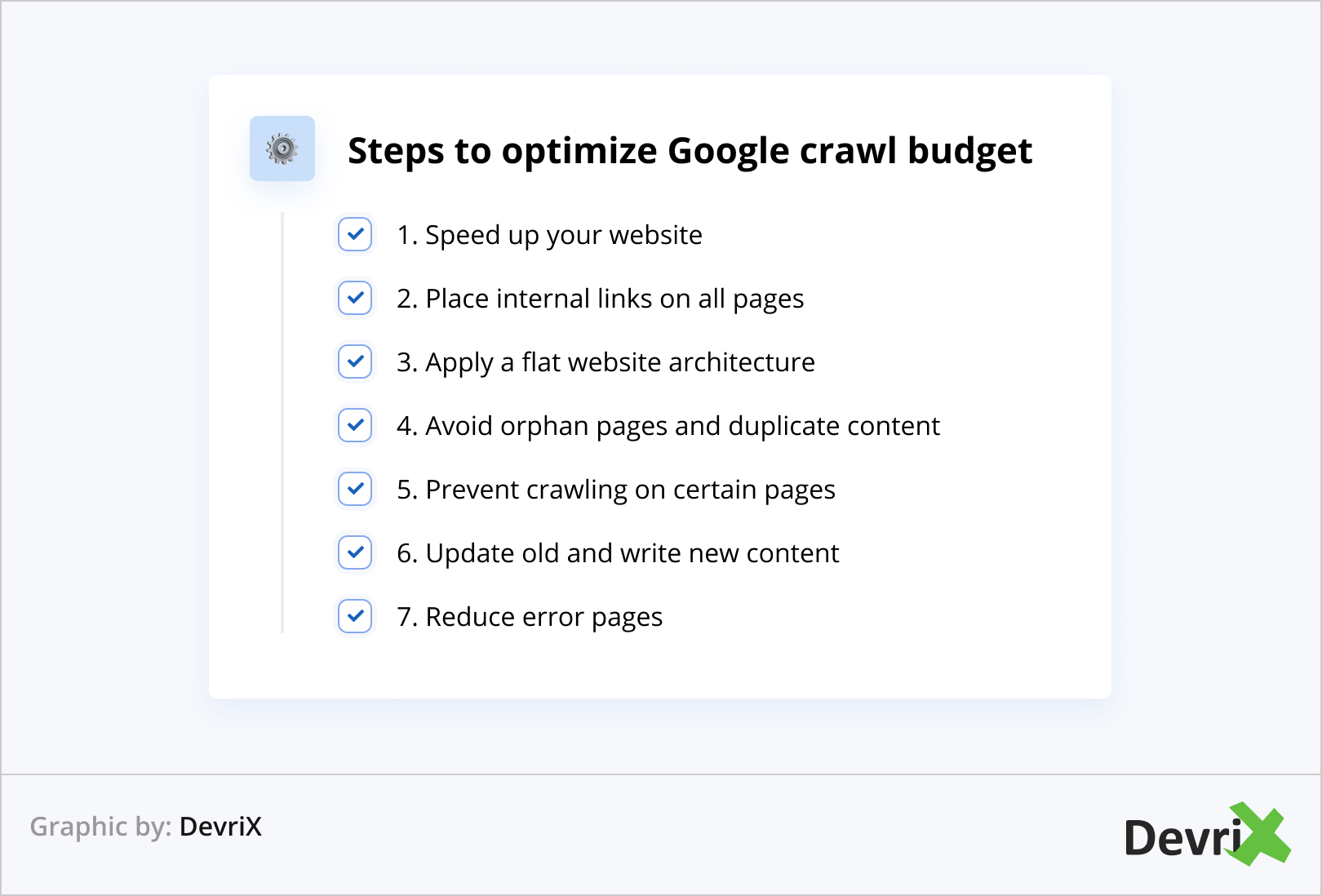

Crawl Budget Optimization in a Nutshell

Let’s recap the steps to optimize Google crawl budget:

- Speed up your website

- Place internal links on all pages

- Apply a flat website architecture

- Avoid orphan pages and duplicate content

- Prevent crawling on certain pages

- Update old and write new content

- Reduce error pages

Bonus Tip

You can check how frequently your website is being crawled by performing a log file analysis. This way, you can determine if certain pages are being crawled more often than others.

In addition, a log file analysis can help you understand whether there are issues in specific areas of the website.
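As a starting point, here’s a Python sketch that reads access-log lines and counts Googlebot requests per top-level site section and per status code. It assumes the widely used “combined” log format (adjust the regular expression if your server logs differently), and the sample lines are made up. It also identifies Googlebot by its user-agent string alone, which can be spoofed; Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# Combined log format: ip ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_summary(lines):
    """Count Googlebot requests per top-level site section and per status code."""
    sections, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip lines we can't parse and requests from other user agents
        path = match["path"].split("?", 1)[0]  # ignore query strings
        section = "/" + path.strip("/").split("/", 1)[0]
        sections[section] += 1
        statuses[match["status"]] += 1
    return sections, statuses

# Made-up sample lines for illustration
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /blog/crawl-budget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:05:00 +0000] "GET /shop/shoes?color=red HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jan/2024:10:06:00 +0000] "GET /blog/ HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

sections, statuses = googlebot_summary(sample_log)
print("Googlebot hits by section:", sections.most_common())
print("Googlebot hits by status:", dict(statuses))
```

A section that gets plenty of Googlebot hits but returns lots of 404s, or an important section that barely gets crawled, is a good place to start digging.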

Bottom Line

Crawl budget is essential for the overall search engine visibility of your website. If Googlebot cannot crawl and index your pages, you practically don’t exist online.

Make sure to apply all the crawl budget optimization techniques we discussed in the article, and you will reap the rewards.

Google will be able to find and index your content faster, and, ultimately, your website will be ahead of the competition that fails or neglects to implement the optimization.

1:0 for you! Good job!